
You Thought Face Swap Technology Was Cute Until These Internet Trolls Got a Hold of It

Using freely available AI software, internet lurkers create fake celebrity sex tapes that have troubling legal implications.

Something about Aubrey Plaza’s sex tape seems a little off. Whenever she opens her mouth, her jawline turns into a mess of large pixels. At other times, her face looks mildly swollen, as if it’s too big for her head. There are a few points during the film where Plaza’s face literally stretches off her head before quickly righting itself.

Other celebrities, from Taylor Swift to Emma Watson to Lucy Liu, have had similar technical problems with their own sex tapes. In some cases, the actress’s head is an entirely different shade from the body it’s attached to, almost as if the two pieces belong to two different people, and that’s because they do. These star-studded clips, known as deepfakes (a portmanteau of “deep learning” and “fake”), use machine learning to stitch the faces of celebrities onto the bodies of porn stars in short, explicit scenes.

These videos first drew mainstream attention this past December, when the Redditor who pioneered this AI-assisted porn technique went public about his process. In an interview with Motherboard, he revealed that he’d made faux smut of actresses like Gal Gadot and Scarlett Johansson using nothing more than a home computer running a basic, open-source machine learning program. The way he described it, anyone with rudimentary coding knowledge and some free time could easily make their own kinda-convincing celebrity porn.

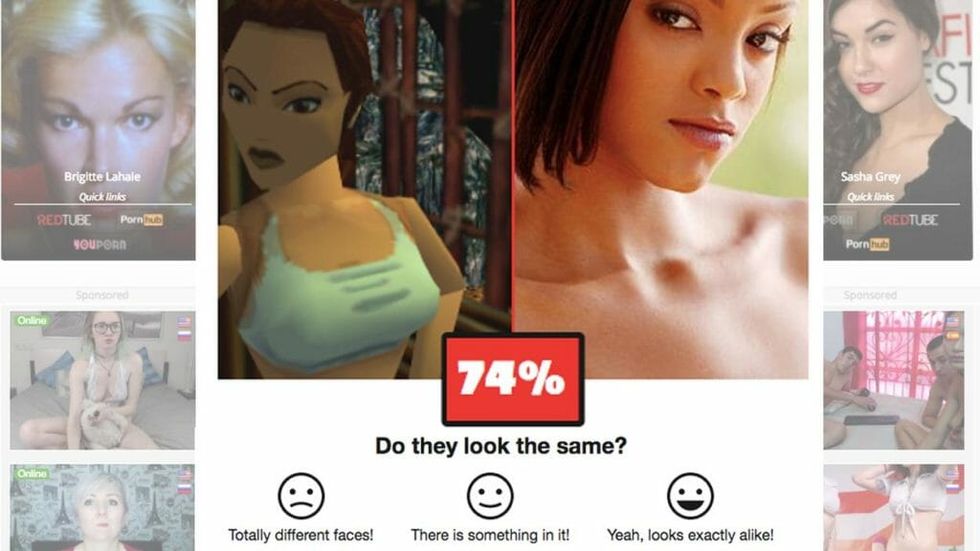

Creating these deepfakes is easy enough. The first step is gathering enough pictures of your movie star. One or two photos ripped from their Instagram feed won’t do; to make the video look realistic, you’ll need a tool like DownAlbum to scour the internet and pull all of the person’s publicly available photos, capturing what their face looks like from different angles, with different expressions, and under different lighting conditions. The tricky part is finding a porn performer to project this face onto. For a seamless, realistic-looking result, the two people should look as similar as possible: similar face shape, skin tone, hair color, and so on. Many deepfakers use facial recognition software for this step; the program identifies the facial features in one photograph and compares them against a database of thousands of porn stars to find the closest match.

While this trend might seem confined to homemade celebrity sex tapes, it’s clear that the software can be used for more sinister ends. Think about it: the programming barrier to making these videos is virtually nonexistent. If a would-be blackmailer wanted to create revenge porn or harass someone, all they’d need is enough pictures of that person’s face to place them, unwittingly, into an explicit video.

In fact, people have already been planning this. Users on Discord, a gamer-friendly group chat platform, have shared conversations about creating deepfake videos using the faces of friends, crushes, and exes instead of celebrities. Some of these users may well have blackmail on their minds. And those who do find themselves victimized by a deepfaker have little recourse, because current laws haven’t quite caught up with the tech. Even laws devoted to revenge porn can’t fully protect people who are mashed into faux pornography against their will: unlike a typical sex tape or nude photos fetched from the cloud, this material isn’t really them, per se. It might be their face, but it’s on someone else’s body.

With victims left without legal recourse, the responsibility to protect the people involved falls onto the websites that host these videos. Twitter and Pornhub, one of the largest streaming porn providers online, have both banned these videos, citing issues of consent; Pornhub equated them to revenge porn. Reddit, the platform that initially spawned deepfakes as a genre, was the latest to ban the content, classifying it as “involuntary pornography,” and shut down the deepfakes subreddit, which had grown to 90,000 members. Fearing a shutdown, some of these users flocked to the Russian social media site ok.ru, where they uploaded their videos so they wouldn’t be lost during the crackdown.

Unfortunately, it’s likely that deepfakers will continue to leap from platform to platform with their content in tow, driving themselves into the farthest, grimiest corners of the internet. We may be able to push them out of town, but it’s unlikely we’ll be able to eradicate them altogether.
