In television and movies, there's been no shortage of stories depicting a dystopian future controlled by artificial intelligence. It’s a popular entertainment trope: artificially intelligent robots turn evil and chaos ensues. The plot device recurs across movies and television shows, from The Terminator to Blade Runner.

Now, in an eerily similar plot twist, researchers have encouraged an actual A.I. algorithm to embrace evil. The scientists are training the A.I., called Norman, to become a legitimate, verifiable psychopath with the help of Reddit. Norman is named after Anthony Perkins’ character in Psycho.

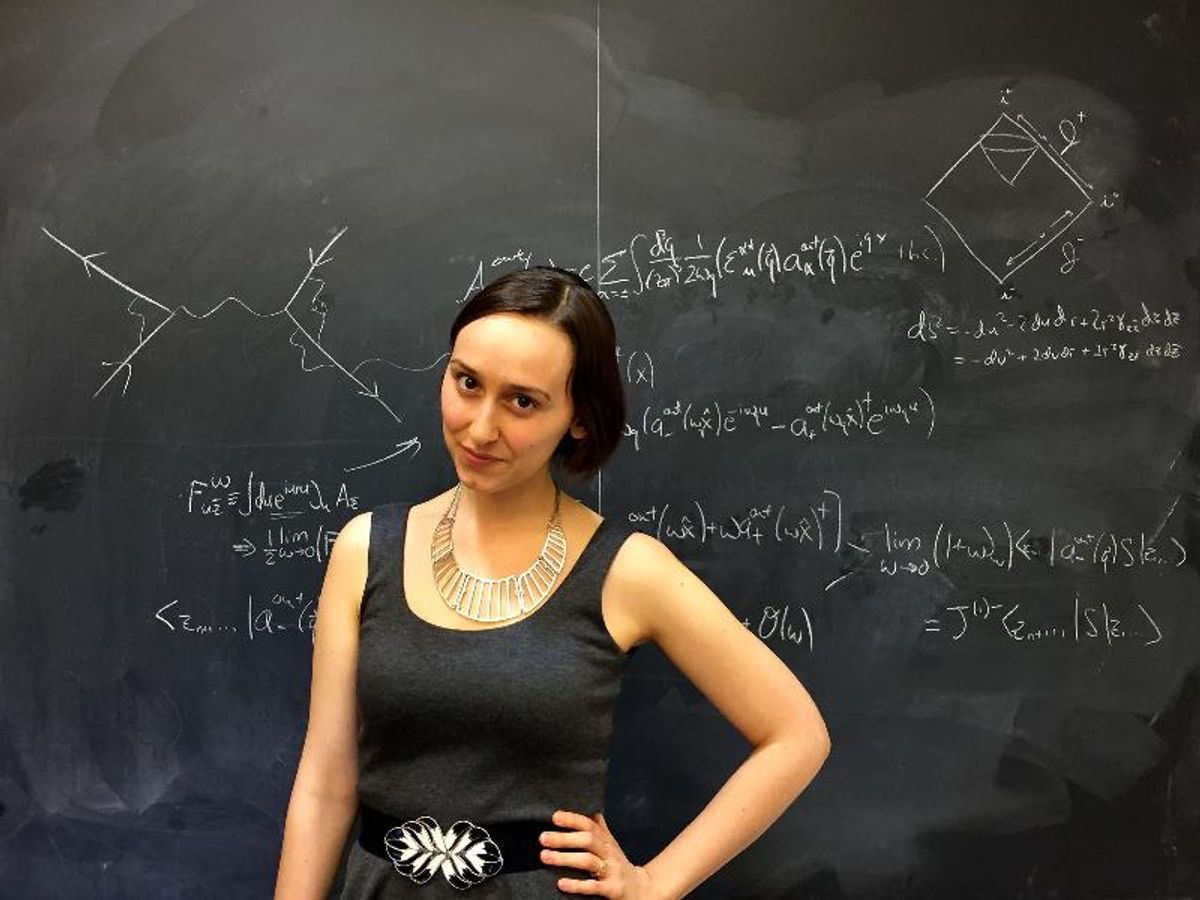

The scientists, from the Massachusetts Institute of Technology, bombarded the artificial intelligence with violent and disturbing images originally found on Reddit. Norman was then given Rorschach inkblot tests to determine how the images had affected its development. The results demonstrated that Norman had become a downright, verifiable psychopath.

Norman was presented with a series of images used in many standard versions of the Rorschach test. Norman’s interpretations of the inkblots were compared with those of another A.I., one that had not been exposed to violent images. In one test, the standard A.I. saw a vase, whereas Norman saw a man shot dead. When the standard A.I. reported seeing a man holding an umbrella, Norman saw a man being shot dead in front of his wife. When the standard A.I. saw a couple standing together, Norman saw a pregnant woman falling to her death off a building.

According to the researchers, the constant onslaught of disturbing images damaged Norman’s ability to engage with empathy and logic.

The purpose of this study was to demonstrate that machine learning is significantly influenced by the data it is trained on. In other words, artificial intelligence can be trained to develop biases based on the kind of data being fed to it.

“When people say that A.I. algorithms can be biased and unfair, the culprit is often not the algorithm itself, but the biased data that was fed to it,” the researchers write.
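The researchers' point can be illustrated with a toy sketch. This is not Norman's actual method (Norman is an image-captioning system); it is a much simpler stand-in, a word-pair counter, and the captions, the `train` and `describe` functions, and the variable names are all invented for illustration. The same code is trained twice, once on neutral captions and once on disturbing ones, and only the data differs.

```python
from collections import Counter, defaultdict

# Invented example captions; these are NOT the data used to train Norman.
NEUTRAL_CAPTIONS = ["man holds umbrella", "man holds flowers", "man holds umbrella"]
DISTURBING_CAPTIONS = ["man is shot", "man is shot", "man is falling"]


def train(captions):
    """Count which word follows which in the training captions."""
    follows = defaultdict(Counter)
    for caption in captions:
        words = caption.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def describe(model, start, max_words=6):
    """Extend `start` by repeatedly picking the most frequent next word."""
    words = [start]
    while len(words) < max_words and words[-1] in model:
        next_word = model[words[-1]].most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)


# Identical algorithm, identical prompt, different training data.
print(describe(train(NEUTRAL_CAPTIONS), "man"))     # man holds umbrella
print(describe(train(DISTURBING_CAPTIONS), "man"))  # man is shot
```

Nothing in `train` or `describe` is "evil." The difference in output comes entirely from the captions each model saw.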

The trend toward machine learning being tainted by human social prejudices has only increased in recent years. Social bias has now developed into algorithm bias. Those algorithms now exist as mirrors that reflect the prejudices, behaviors and biases of society as a whole.

"2017, perhaps, was a watershed year, and I predict that in the next year or two the issue is only going to continue to increase in importance," said Arvind Narayanan, an assistant professor of computer science at Princeton and data privacy expert. "What has changed is the realization that these aren't specific exceptions of racial and gender bias. It's almost definitional that machine learning is going to pick up and perhaps amplify existing human biases. The issues are inescapable."

Currently, Google is one company attempting to steer the use of artificial intelligence toward good. A public statement from CEO Sundar Pichai makes clear that Google intends to “do no evil” in its use and further development of artificial intelligence.

“Beyond our products, we're using A.I. to help people tackle urgent problems. A pair of high school students are building A.I.-powered sensors to predict the risk of wildfires. Farmers are using it to monitor the health of their herds. Doctors are starting to use A.I. to help diagnose cancer and prevent blindness. These clear benefits are why Google invests heavily in A.I. research and development, and makes A.I. technologies widely available to others via our tools and open-source code.”

Essentially, whether an A.I. turns out to be a psychopath depends largely on the biased information it receives from its human creators. After all, psychopathy is a legitimate possibility of the human condition. Whether an artificial intelligence turns out to be good or evil depends heavily on the humans who created it.
